In any non-trivial system, failure is inevitable and incidents happen. How we deal with an incident’s aftermath determines whether it just costs money or whether we gain something from it.

I once heard John Allspaw say something about incidents and outages that fits perfectly:

“Incidents are unplanned investments in your company’s survival.”

So you have made this “investment”, everything is back up and running, and now you should get as much value from it as possible. The first step in this process is conducting a post mortem.

Root cause fallacy

In many organizations, a post-incident analysis is called “root cause analysis”, and therein lies a problem. It often leads to the conclusion that one thing went wrong, and that if this one thing had not gone wrong, the incident would not have occurred. That may well be true, but there is usually more than one factor that led to an incident, and if we are satisfied with finding THE root cause, we miss a whole lot of potential issues.

I understand management’s desire to simplify the incident, identify the Cause, fix it and move on. However, if we want to get maximum value for the money we already “spent” on the incident, we should not be satisfied with this.

The goal of finding The Root Cause, together with people’s tendency to look primarily for external factors, often ends with the root cause being declared a faulty hardware component or a bug in third-party software. That way, there is someone external to blame, potentially sue, and everyone can go home worry-free. And because we already have the one true root cause, there is no need to investigate further.

If we are unable to find an external vendor to blame, the other popular tendency is to look for somebody internal who screwed up and discipline them. This is even worse than finding an external cause. Not only does it usually not solve anything, it actively prevents the company from learning from this incident and from any future ones. If finding the offender and punishing them becomes the norm, the primary concern of everyone involved in any later incident becomes “ass covering” rather than a fast and successful resolution. And that is the last thing you want in an engineering organization, or any organization for that matter.

It requires more work, time and commitment, but if we do things differently, we can gain a much deeper understanding of how things truly work and fail.

What does blameless even mean?

The term “blameless post-mortem” is not new and I certainly did not come up with it, but I still quite often encounter quizzical looks when I speak about performing one.

Does it mean we let people off the hook for being sloppy? That they can get away with doing a poor job? Well, it’s a bit more complicated than that, but the simple answer is: Yes, sort of.

What? – Anarchy! Chaos!

You see, I have witnessed countless incidents. Some were small, causing a five-minute website outage. Some were big, resulting in the loss of a few months of people’s e-mails in a medium-sized organization. I cannot rule out that it might happen somewhere, but I have yet to see an incident caused by someone in the organization deliberately wanting to cause harm or knowingly sabotaging the company. So you should always assume good intent, unless proven otherwise.

Being at the source of an incident is extremely stressful for everyone, and different people deal with this stress differently. Some turn green, some go numb, some start joking around. Try not to pass immediate judgement on people’s intent based on how they appear during the incident or shortly afterwards.

Also assume that the people who “screwed up” usually know it before anyone else does. The feeling alone is a sort of punishment in itself, sometimes a severe one, so there is no need to drive the point home any further. Quite the contrary, actually. People sometimes blame themselves even in situations where they were set up to fail by external factors and their action was merely the proverbial last straw. In such cases, they need encouragement rather than scathing remarks.

A blameless post mortem means that you are not looking for someone to blame. Instead, you are looking for knowledge: why and how your system failed, and how you can do better in the future. Once you get rid of the blame game, you will see a whole new stream of insight open up. People will be more honest in describing what they did and why, even if it was wrong in hindsight. You want that. By removing the blame, you remove their incentive to hide important but unflattering facts or to change the story they tell.

Human error

There is another concept often encountered during post-incident analyses: the mythical “human error”. You sometimes hear the question: “Was it equipment failure or human error?” If we don’t know any better, the reasoning that follows is:

a) If it’s equipment failure, either we cannot do anything about it, or maybe we budget for better equipment next year;

b) If it’s “human error”, find out who did it and punish them, or at least tell them to “pay more attention next time”.

This, of course, is utterly useless for increasing overall resilience against future failures.

The problem with this approach is that if you look hard enough, you can always end your analysis with “human error”. Even an equipment failure can be considered “human error”: either someone made a mistake during manufacturing, or someone made a poor choice when selecting the particular equipment for the job, or it was not maintained properly. But the fact remains that saying it was human error gives you absolutely nothing, except someone to blame. And we have already established that we are not looking for that.

So I suggest you simply remove the phrase “human error” from your vocabulary during the post mortem. Never be satisfied with “someone made a mistake”. Refusing to stop there opens up the discussion to topics like:

- Do the people have proper training for the job?

- Do we have enough visibility into our systems? Are they observable?

- Do we have reasonable safeguards for potentially dangerous actions?

- Are we overworking our people?

- Are we lacking redundancy somewhere?

- Are we incentivising unnecessary risk-taking with badly designed KPIs?

- … and so on

Practical guide

Even while the incident is still ongoing, you can already start preparing for the post mortem analysis. If you are the manager, you are ideally suited for this. Instead of standing behind your people’s backs and asking if it’s fixed yet, you should take notes and keep a timeline. Write down what people are yelling. Make notes of what you are seeing.

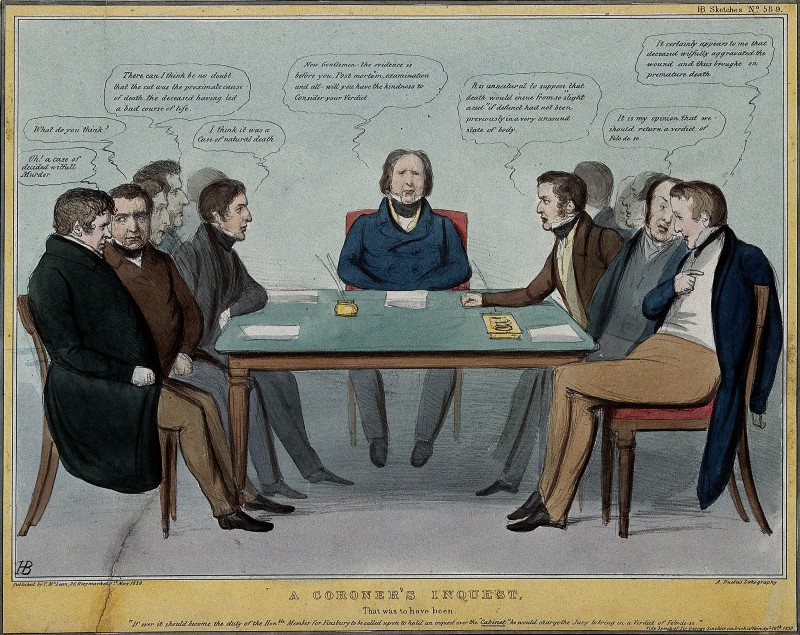

After the incident is resolved, let people rest a bit, but don’t wait more than one or two days. Encourage everyone to take personal notes in the meantime. Schedule the post mortem meeting and have everyone who had anything to do with the incident in the same room or on the conference call. Select one person to be the “coroner” and task this person with leading the analysis and producing the report. This will take some time (possibly even several days for complicated incidents), so make sure the coroner has enough capacity available to do it.

At the very beginning, say out loud that this will be a blameless post mortem and explain what that means. After that, let everyone tell their version of the story. Make sure everyone pays attention. The first couple of times you do this, you will realize that many people present very different stories, which means they have very different mental models of the system. That is inevitable in any sufficiently complex system, but conducting post mortems this way helps align the mental models of the various members of your team. It may seem like a waste of time when developers have to listen to network administrators explain how they debugged a packet fragmentation problem, but bear in mind that one of the primary outcomes of the post mortem is knowledge sharing.

Let the people describe:

- how they found out that something was wrong;

- what actions they took and when;

- where they looked for information and what they saw at the time;

- what assumptions they had when they made certain choices;

- who they talked to and why, and what they learned from it.

Write everything down and construct a detailed timeline of actions and conversations. Attach any relevant logs and chat room contents. It may actually take more than one session, or the coroner may set up further one-on-one sessions with the people involved if more detail is required.
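As a minimal sketch of how such a timeline could be assembled, the snippet below merges the personal notes of several participants into one chronological list. The `TimelineEntry` fields, the `merge_timelines` and `render` helpers, and the sample notes are all made up for illustration; adapt them to whatever note-taking format your team already uses.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class TimelineEntry:
    """One note taken during or after the incident (hypothetical schema)."""
    at: datetime  # when it happened, as best the note-taker recalls
    who: str      # a role or a name; this records events, it does not assign blame
    kind: str     # e.g. "observation", "action", "assumption", "conversation"
    note: str


def merge_timelines(*personal_notes: List[TimelineEntry]) -> List[TimelineEntry]:
    """Combine everyone's personal notes into one chronological timeline."""
    merged = [entry for notes in personal_notes for entry in notes]
    # sorted() is stable, so entries with equal timestamps keep their input order.
    return sorted(merged, key=lambda entry: entry.at)


def render(timeline: List[TimelineEntry]) -> str:
    """Format the timeline as plain text lines, ready to paste into the report."""
    return "\n".join(
        f"{e.at:%H:%M} [{e.kind}] {e.who}: {e.note}" for e in timeline
    )


# Invented sample notes, for illustration only.
dev_notes = [
    TimelineEntry(datetime(2024, 1, 1, 10, 5), "developer", "action",
                  "rolled back the last deploy"),
]
ops_notes = [
    TimelineEntry(datetime(2024, 1, 1, 10, 2), "network admin", "observation",
                  "packet loss on the uplink"),
]

timeline = merge_timelines(dev_notes, ops_notes)
print(render(timeline))
```

Keeping the kind of each entry (observation, action, assumption, conversation) makes it easier to see later where different people’s mental models diverged.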

When looking for the reasons the outage happened, try not to identify only the direct causes, but also look for contributing factors. Why did it take so long to find out about the problem? Why did it take so long to resolve? Why was the effect of the outage so large? Why did our tests not catch this? And so on.

The final report should contain at least the following sections:

- detailed timeline of events and actions;

- identified business impact (revenue lost, damage incurred, goodwill impact, …);

- identified causes and contributing factors (remember, no “human error”);

- resulting remediation tasks or at least areas of further analysis.

The last one might be tricky sometimes. Your management will primarily be interested in this section: “What are we doing so that it does not happen again?” It should be OK to say “We don’t know yet”. Not everything can be solved immediately. Even though it might seem strange, sometimes the answer can also be “nothing”. In cases where the analysis has increased the knowledge and insight of the team, the post-mortem itself may be the remediation action. But most often, there is something that can be done to prevent similar incidents in the future.
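The report sections listed above can be captured in a small skeleton, sketched here under the assumption that you keep reports as structured data before writing them up. The `PostMortemReport` class, its field names and the `problems` check are hypothetical, and the sample content is invented. The check only flags empty sections and causes that stop at “human error”; an explicit “We don’t know yet” counts as a valid remediation entry.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PostMortemReport:
    """Skeleton mirroring the four report sections (field names are illustrative)."""
    timeline: List[str] = field(default_factory=list)
    business_impact: List[str] = field(default_factory=list)
    causes_and_factors: List[str] = field(default_factory=list)
    remediation: List[str] = field(default_factory=list)

    def problems(self) -> List[str]:
        """Return reasons the report is not ready to circulate yet."""
        found = []
        for name in ("timeline", "business_impact",
                     "causes_and_factors", "remediation"):
            if not getattr(self, name):
                found.append(f"section '{name}' is empty")
        for cause in self.causes_and_factors:
            # "Human error" is where the analysis should start, not where it ends.
            if "human error" in cause.lower():
                found.append(
                    f"'{cause}' stops at human error; "
                    "ask what made the mistake possible"
                )
        return found


# Invented sample content, for illustration only.
report = PostMortemReport(
    timeline=["10:02 packet loss noticed", "10:05 last deploy rolled back"],
    business_impact=["checkout unavailable during the outage"],
    causes_and_factors=["Human error by the operator"],
    remediation=["We don't know yet"],
)
issues = report.problems()
print(issues)
```

Here the only issue reported is the cause that names “human error”, which is a prompt to keep asking about training, safeguards, visibility and incentives.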

It is not the intention of this article to describe every possible action you can take to increase the resilience of your system. Just make sure you do not limit yourself to technical measures. More often than not, the problem lies in procedures, communication, training and incentives.

Even though most of us do not work on systems where people’s lives are at risk, it is beneficial to look at industries where they are and study how they deal with failure. The aviation and civil engineering industries can be a great source of inspiration. If the aviation industry’s reaction to a crash caused by “pilot error” was to tell pilots “to be more careful next time, or else …”, I think none of us would want to fly anymore.

Safety breeds safety

I recommend watching Simon Sinek’s TED talk about how great leaders make you feel safe. The same principles apply in post-mortems. If you make your people feel safe talking openly about incidents, without fear of punishment, they will. If you create a safe environment for learning from mistakes, learning will happen. Many people will even become enthusiastic about improving the safety of your system. And this, in the end, is what you should really care about.
